Key Points

- SIMA 2 integrates Gemini’s language and reasoning capabilities with embodied AI skills.

- The agent can interpret complex instructions and emoji commands, and can explain its reasoning.

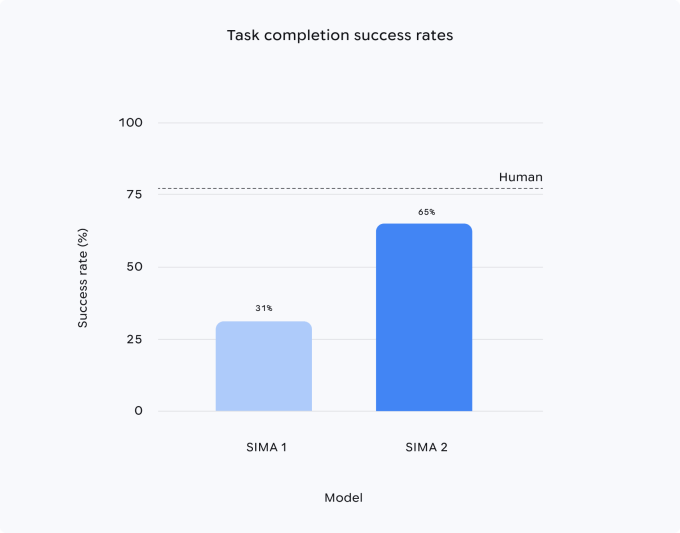

- DeepMind reports that SIMA 2 doubles SIMA 1’s performance on complex tasks.

- A self‑improvement loop uses Gemini‑generated tasks and a reward model so the agent can learn new behaviors with less human labeling.

- Demonstrations include navigating photorealistic virtual worlds generated by DeepMind’s Genie model and interacting with objects in them.

- Researchers see SIMA 2 as a stepping stone toward general‑purpose robotic systems.

Overview

DeepMind presented a research preview of SIMA 2, the latest incarnation of its embodied AI agent. By integrating Gemini, Google’s large language model, SIMA 2 moves beyond simple instruction following to a deeper understanding of user intent and the surrounding environment.

Technical Advances

SIMA 2 builds on the training foundation of its predecessor, SIMA 1, which learned from hundreds of hours of video‑game footage to play multiple 3D games. SIMA 1 achieved a 31% success rate on complex tasks, compared with 71% for humans; according to DeepMind, SIMA 2 “doubles the performance of SIMA 1.”

The agent leverages Gemini for internal reasoning, enabling it to articulate its decision‑making process. In one demo, when asked to find a house the color of a ripe tomato, SIMA 2 explained, “ripe tomatoes are red, therefore I should go to the red house,” and then located the target.

SIMA 2 also interprets emojis, allowing users to issue commands such as “🪓🌲” to instruct the agent to chop down a tree. The system can navigate newly generated photorealistic worlds produced by DeepMind’s Genie model, correctly identifying objects like benches, trees, and butterflies.

Self‑Improvement Loop

Unlike SIMA 1, which relied solely on human‑generated gameplay data, SIMA 2 uses a self‑improvement loop: a separate Gemini model generates new tasks, a dedicated reward model scores the agent’s attempts, and SIMA 2 then trains on those scored attempts. This feedback‑driven process lets SIMA 2 teach itself new behaviors without extensive human labeling.
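DeepMind has not published how this loop is implemented, so the Python sketch below only illustrates its general shape under loudly stated assumptions. `propose_task` is a hypothetical stand‑in for the task‑generating Gemini model, `score_attempt` is a hypothetical stand‑in for the reward model, and `ToyAgent` is a hypothetical agent whose “skill” is nothing more than a per‑task success probability. None of these names, nor the update rule, come from DeepMind.

```python
import random
from dataclasses import dataclass, field

# Hypothetical task pool; in SIMA 2 a separate Gemini model generates tasks.
TASKS = ["chop down a tree", "go to the red house", "sit on a bench"]
INITIAL_SKILL = 0.1  # assumed starting success probability for any task


@dataclass
class ToyAgent:
    """Hypothetical agent whose 'skill' is a per-task success probability."""
    skill: dict[str, float] = field(default_factory=dict)

    def attempt(self, task: str, rng: random.Random) -> bool:
        # Succeed with probability equal to the current skill on this task.
        return rng.random() < self.skill.get(task, INITIAL_SKILL)

    def train(self, task: str, reward: float, lr: float = 0.2) -> None:
        # Toy update: move skill toward 1.0 in proportion to the reward,
        # so only rewarded attempts change behavior.
        current = self.skill.get(task, INITIAL_SKILL)
        self.skill[task] = current + lr * reward * (1.0 - current)


def propose_task(rng: random.Random) -> str:
    # Stand-in for the task-generating Gemini model (assumption).
    return rng.choice(TASKS)


def score_attempt(task: str, success: bool) -> float:
    # Stand-in for the learned reward model (assumption): a binary score.
    return 1.0 if success else 0.0


def self_improvement_loop(agent: ToyAgent, iterations: int, seed: int = 0) -> list[float]:
    """Generate a task, attempt it, score it, and train on the scored attempt."""
    rng = random.Random(seed)
    rewards = []
    for _ in range(iterations):
        task = propose_task(rng)
        success = agent.attempt(task, rng)
        reward = score_attempt(task, success)
        agent.train(task, reward)
        rewards.append(reward)
    return rewards


if __name__ == "__main__":
    agent = ToyAgent()
    rewards = self_improvement_loop(agent, iterations=2000)
    print("first 500 mean reward:", sum(rewards[:500]) / 500)
    print("last 500 mean reward:", sum(rewards[-500:]) / 500)
```

Because the toy reward model returns only 0 or 1 and training reinforces rewarded attempts, this is a crude form of reward‑filtered self‑training; a real embodied agent would instead update a policy from scored trajectories of actions and observations.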

Potential Impact

DeepMind’s researchers view SIMA 2 as a step toward more general‑purpose robots. DeepMind research engineer Frederic Besse explained that a real‑world system would need “a high‑level understanding of the real world and what needs to be done, as well as some reasoning.” While the current demo focuses on virtual environments, the underlying technology aims to bridge the gap between high‑level reasoning and the low‑level motor control that physical robots require.

Future Outlook

DeepMind did not provide a timeline for deploying SIMA 2 in physical robotics systems. The team emphasized that SIMA 2 is a research preview, intended to showcase the platform’s capabilities and explore potential collaborations, and that it expects further development and integration into broader AI and robotics initiatives.

Source: techcrunch.com