Key Points

- Google must double AI serving capacity every six months.

- Goal: increase compute, storage, and networking roughly a thousand‑fold in 4‑5 years.

- Amin Vahdat stresses holding cost, power, and energy use essentially flat while capacity grows.

- OpenAI plans six new U.S. data centers, pledging over $400 billion in the next three years for nearly 7 GW of capacity.

- OpenAI serves 800 million weekly ChatGPT users, and even paid subscribers hit usage limits on advanced features.

- Google aims for infrastructure that is more reliable, performant, and scalable than competitors.

- Key challenge: scaling while keeping costs and energy consumption stable.

Scaling AI Infrastructure at Breakneck Speed

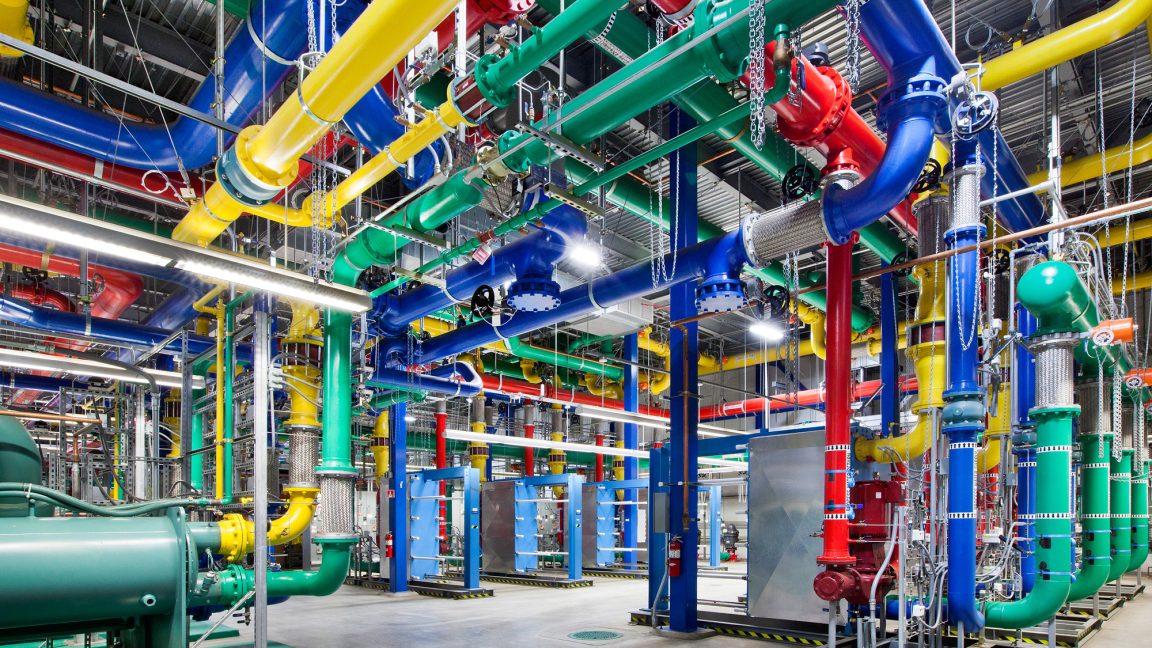

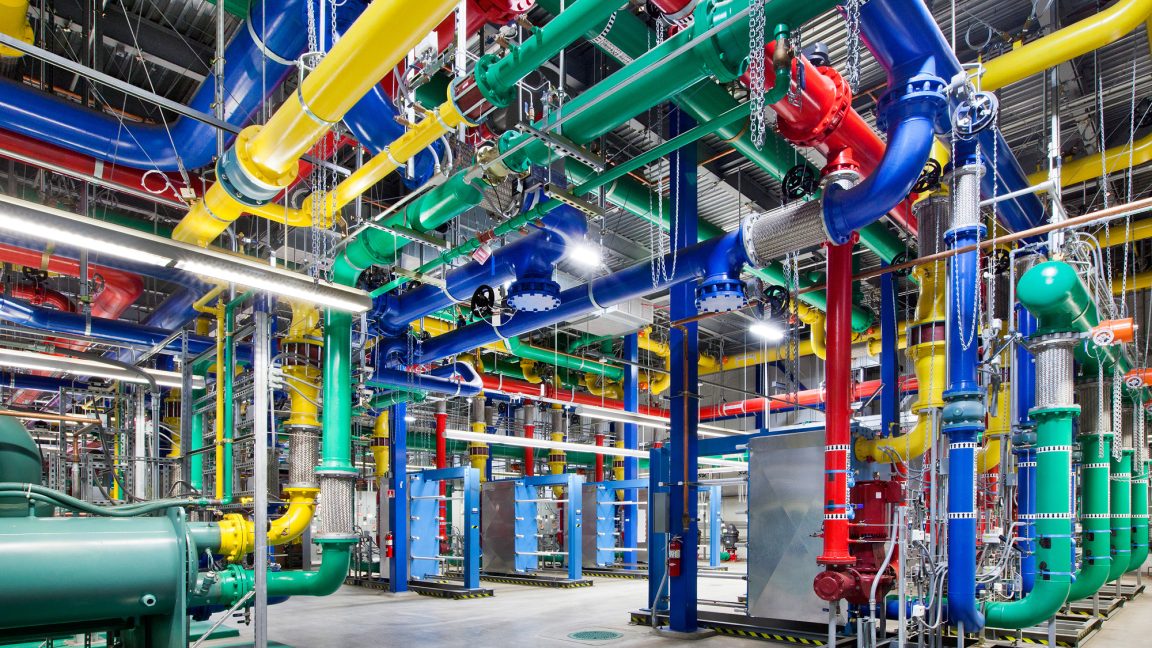

During an all‑hands meeting, Google’s AI infrastructure head Amin Vahdat announced that the company must double its serving capacity every six months to satisfy the rapid growth in artificial‑intelligence services. Vahdat, a vice president at Google Cloud, presented slides indicating the need to scale “the next 1000x in 4‑5 years.” This ambitious target reflects the pressure to deliver more compute, storage, and networking power while keeping costs, power consumption, and energy usage essentially unchanged.

Vahdat emphasized that achieving such growth will not be easy, but he expressed confidence that collaboration and co‑design across the organization will make it possible. He highlighted the goal of building infrastructure that is “more reliable, more performant and more scalable than what’s available anywhere else.”
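The two figures quoted above, doubling every six months and "1000x in 4‑5 years," can be checked against each other with simple arithmetic. The sketch below assumes clean exponential growth with a fixed six‑month doubling period, which is a simplification not stated in the source; the function names `growth_factor` and `years_to_reach` are illustrative only.

```python
import math


def growth_factor(years: float, doubling_period_years: float = 0.5) -> float:
    """Capacity multiple after `years`, assuming one doubling per period."""
    return 2 ** (years / doubling_period_years)


def years_to_reach(multiple: float, doubling_period_years: float = 0.5) -> float:
    """Years needed to reach `multiple` times the starting capacity."""
    return math.log2(multiple) * doubling_period_years


if __name__ == "__main__":
    # Assumed horizon values spanning the 4-5 year range from the slides.
    for years in (4, 4.5, 5):
        print(f"{years} years -> {growth_factor(years):,.0f}x")
    print(f"1000x reached after about {years_to_reach(1000):.2f} years")
```

Under these assumptions, four years of doubling gives 256x and five years gives 1,024x, so the 1000x figure corresponds to the upper end of the stated 4‑5 year window.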

Competitive Landscape

Google’s scaling effort is taking place in a fiercely competitive environment. OpenAI, a major rival, is planning to build six massive data centers across the United States through its Stargate partnership with SoftBank and Oracle. OpenAI has pledged more than $400 billion over the next three years to reach nearly 7 gigawatts of capacity, aiming to support its 800 million weekly ChatGPT users. Today, even paid subscribers regularly encounter usage limits for advanced features such as video synthesis and simulated reasoning models.

The race to expand AI infrastructure is described as the most critical and expensive part of the broader AI competition. While Google acknowledges that it will spend heavily, the focus is on delivering a platform that can meet demand without sacrificing reliability or efficiency.

Challenges and Constraints

Key constraints identified by Vahdat include the need to increase capability, compute, storage, and networking “for essentially the same cost and increasingly, the same power, the same energy level.” The company must balance rapid capacity expansion with sustainability and cost‑effectiveness. Additionally, it remains unclear how much of the projected demand stems from organic user interest versus internal integration of AI features into existing services such as Search, Gmail, and Workspace.

Nevertheless, Google is committed to meeting the scaling targets, aiming to stay ahead of competitors by delivering a superior AI infrastructure that can handle the growing workloads of both external customers and internal product teams.

Source: arstechnica.com