Key Points

- More than one million Trainium2 chips are in production, and over 100,000 companies use the chip.

- Trainium2 is now a multi‑billion‑dollar revenue run‑rate business, according to Amazon CEO Andy Jassy.

- Anthropic’s Project Rainier utilizes more than 500,000 Trainium2 chips for model training.

- Amazon unveiled Trainium3 at re:Invent, promising four times the speed and lower power usage than Trainium2.

- Amazon positions Trainium’s price‑performance as a compelling alternative to Nvidia GPUs.

- Only a few U.S. firms have the full stack needed to challenge Nvidia’s AI‑chip dominance.

Amazon’s Trainium Business Gains Traction

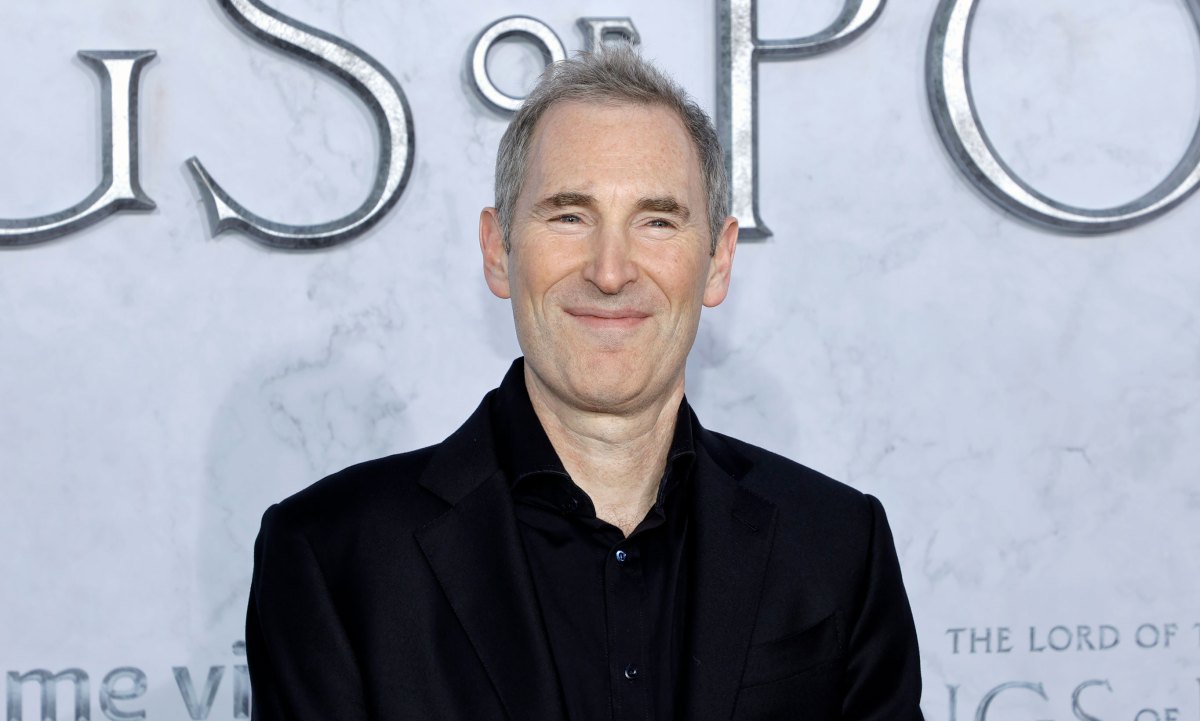

Amazon CEO Andy Jassy announced that Trainium2, the company’s AI chip that competes with Nvidia’s, has become a business with a multi‑billion‑dollar revenue run rate. He cited “substantial traction,” with more than one million chips in production and over 100,000 companies using the chip, many of them through Bedrock, Amazon’s AI app development platform.

Jassy emphasized the chip’s price‑performance advantages over other GPU options, positioning it as a cost‑effective alternative for cloud customers.

Key Partnerships and Customer Wins

AWS CEO Matt Garman highlighted Anthropic as a major revenue driver, noting that the partnership’s Project Rainier deployment involves over 500,000 Trainium2 chips to train the next generation of Claude models. Anthropic has made AWS its primary model‑training partner, although it also runs on Microsoft’s cloud with Nvidia chips.

OpenAI also uses AWS, but its workload runs on Nvidia hardware, limiting its impact on Trainium revenue.

Next‑Generation Chip Reveal

At the AWS re:Invent conference, Amazon introduced Trainium3, which the company claims is four times faster and consumes less power than Trainium2.

Amazon’s broader strategy includes building chips that can interoperate with Nvidia GPUs, aiming to broaden adoption while navigating the entrenched CUDA software ecosystem that currently favors Nvidia.

Competitive Landscape

Only a handful of U.S. firms (Google, Microsoft, Amazon, and Meta) possess the combined expertise in silicon design, high‑speed interconnects, and networking needed to compete seriously with Nvidia.

Amazon’s focus on delivering lower‑cost, high‑performance AI hardware reflects its classic approach of leveraging homegrown technology to challenge market leaders.

Source: techcrunch.com