Key Points

- All AI agents successfully implemented Minesweeper’s core chording mechanic.

- On‑screen instructions were provided for both desktop and mobile browsers.

- Flagging options included cycling through “?” marks and long‑pressing to flag on mobile.

- Graphics remained retro but were simplistic, using basic symbols for mines and flags.

- Sound effects mimicked late‑’80s PC beeps, with an option to mute.

- A “Lucky Sweep Bonus” button granted a free safe tile, unlocked only by clearing a large area in one click.

- OpenAI Codex took about twice as long to produce a functional version as Claude Code.

- The agents’ terminal interfaces offered commands for permission management and progress animations.

- The test highlighted both the potential of AI coding agents and their current limitations in UI polish.

Overview

In a recent experiment, four artificial‑intelligence coding agents were asked to recreate the iconic Minesweeper puzzle game. The goal was to see how well these agents could handle both the logical underpinnings of the game and the user‑interface details that make the experience familiar to longtime players.

Feature Implementation

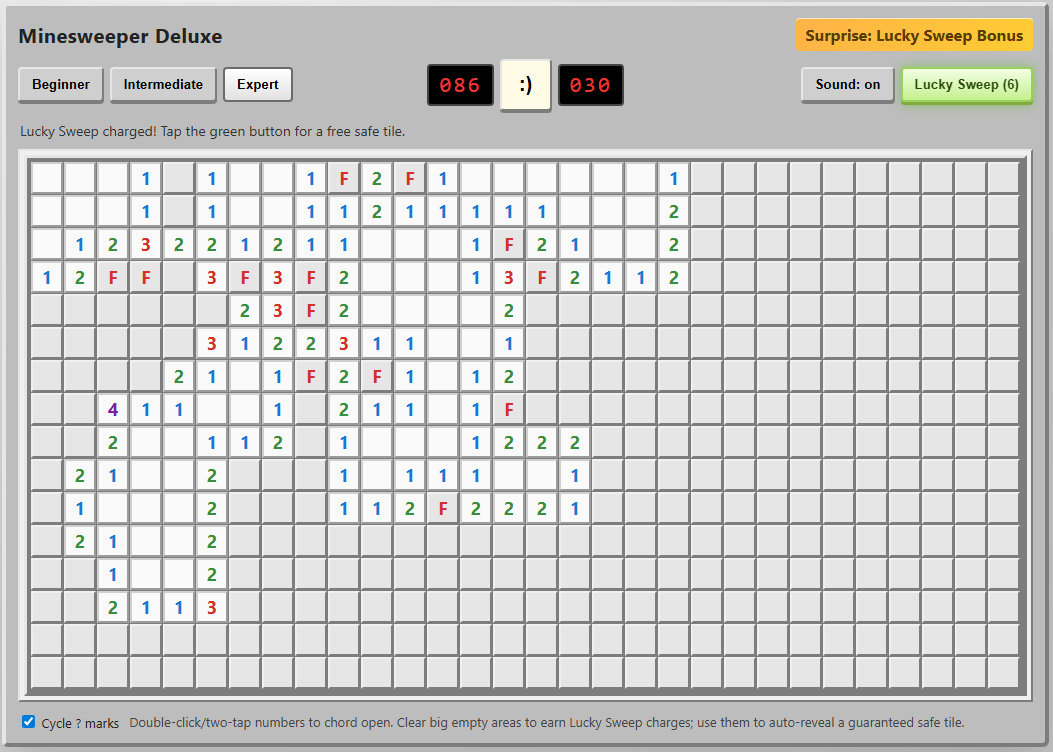

The agents succeeded in implementing the essential gameplay elements. All versions included the crucial “chording” feature: once the number on a revealed square matches the count of flags placed around it, clicking that number reveals all of its remaining unflagged neighbors at once. They also provided on‑screen instructions for both desktop and mobile browsers.
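To make the chording rule concrete, here is a minimal Python sketch; it is not code from any of the agents tested. It assumes a board stored as sets of `(row, col)` tuples, and the helper names `neighbors` and `chord` are hypothetical. For brevity it omits the flood‑fill cascade that real Minesweeper triggers when a chord opens an empty square.

```python
from typing import Iterator, List, Set, Tuple

Cell = Tuple[int, int]  # (row, col)


def neighbors(r: int, c: int, rows: int, cols: int) -> Iterator[Cell]:
    """Yield the up-to-eight in-bounds neighbours of (r, c)."""
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if (dr or dc) and 0 <= r + dr < rows and 0 <= c + dc < cols:
                yield (r + dr, c + dc)


def chord(r: int, c: int, mines: Set[Cell], flags: Set[Cell],
          revealed: Set[Cell], rows: int, cols: int) -> List[Cell]:
    """Chord on a revealed number (hypothetical helper): if the adjacent flag
    count equals the number, open every unflagged, unrevealed neighbour.
    Returns the newly opened cells, or [] when the chord does not apply."""
    if (r, c) not in revealed:
        return []
    around = list(neighbors(r, c, rows, cols))
    number = sum(1 for n in around if n in mines)   # the digit shown on the square
    flagged = sum(1 for n in around if n in flags)
    if number == 0 or flagged != number:
        return []
    opened = [n for n in around if n not in flags and n not in revealed]
    revealed.update(opened)
    return opened


# Usage: a 3x3 board with one mine in the corner, correctly flagged.
mines = {(0, 0)}
flags = {(0, 0)}
revealed = {(1, 1)}
print(chord(1, 1, mines, flags, revealed, 3, 3))
# → [(0, 1), (0, 2), (1, 0), (1, 2), (2, 0), (2, 1), (2, 2)]
```

If a flag has been misplaced, the chord can open a mine, just as in the original game, so a caller would check `any(cell in mines for cell in opened)` afterward.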

Flagging mechanics also received thoughtful touches. One agent added an option to cycle through “?” marks when marking squares, a feature of the original game that even many human‑made clones overlook. For mobile users, holding a finger down on a square places a flag, improving handheld usability.
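The “?” cycle and the long‑press gesture can both be modeled as small pieces of state logic. The sketch below is illustrative only: the `Mark` enum, `next_mark`, `classify_press`, and the 500 ms threshold are hypothetical choices, not details reported from the experiment.

```python
from enum import Enum


class Mark(Enum):
    NONE = "blank"
    FLAG = "flag"
    QUESTION = "?"


def next_mark(current: Mark, question_marks: bool = True) -> Mark:
    """Advance a hidden square's mark on each right-click (or long press):
    blank -> flag -> ? -> blank, skipping "?" when the option is off."""
    if current is Mark.NONE:
        return Mark.FLAG
    if current is Mark.FLAG:
        return Mark.QUESTION if question_marks else Mark.NONE
    return Mark.NONE


LONG_PRESS_MS = 500  # hypothetical threshold; a real app would tune this


def classify_press(duration_ms: float, threshold_ms: float = LONG_PRESS_MS) -> str:
    """Map a touch on a hidden square to an action: a short tap reveals,
    a press held past the threshold places or cycles the mark."""
    return "mark" if duration_ms >= threshold_ms else "reveal"


mark = Mark.NONE
for _ in range(3):
    mark = next_mark(mark)
    print(mark.value)  # flag, then ?, then blank
print(classify_press(120), classify_press(650))  # reveal mark
```

Keeping the “?” step behind a boolean mirrors how the original game treated question marks as an option players could switch off.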

User Experience

The visual presentation stayed true to the retro aesthetic, featuring the familiar smiley‑face reset button. However, the graphics were simplistic, using a plain asterisk for revealed mines and a red “F” for flagged tiles, which some testers found unattractive.

Sound effects evoked the nostalgic beeps of late‑’80s PCs, and the game offered an option to mute them. A “Surprise: Lucky Sweep Bonus” button was also included, granting a free safe tile when available. The bonus proved useful in tight situations, but it became available only after a large area was cleared with a single click, making it feel more like a reward for luck than a balanced strategic tool.
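One plausible way to wire up a bonus like this is to count how many squares a single click opens via flood fill and unlock the bonus once that count crosses a threshold. This is an assumption‑laden sketch: the article does not say how the agent implemented the feature, and the `LuckySweep` class, the `reveal` helper, and the default threshold of 20 squares are hypothetical. Flags are ignored in the flood fill for brevity.

```python
import random
from collections import deque
from typing import Iterator, Optional, Set, Tuple

Cell = Tuple[int, int]  # (row, col)


def neighbors(r: int, c: int, rows: int, cols: int) -> Iterator[Cell]:
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if (dr or dc) and 0 <= r + dr < rows and 0 <= c + dc < cols:
                yield (r + dr, c + dc)


def reveal(start: Cell, mines: Set[Cell], revealed: Set[Cell],
           rows: int, cols: int) -> int:
    """Flood-fill reveal from a safe square; returns how many squares opened.
    Flags are not considered in this simplified version."""
    if start in revealed:
        return 0
    revealed.add(start)
    queue = deque([start])
    opened = 0
    while queue:
        cell = queue.popleft()
        opened += 1
        # A square with no adjacent mines cascades into all of its neighbours.
        if not any(n in mines for n in neighbors(*cell, rows, cols)):
            for n in neighbors(*cell, rows, cols):
                if n not in revealed:
                    revealed.add(n)
                    queue.append(n)
    return opened


class LuckySweep:
    """Hypothetical bonus: unlocked by one big clear, spent on one safe square."""

    def __init__(self, threshold: int = 20):
        self.threshold = threshold
        self.available = False

    def record_click(self, squares_opened: int) -> None:
        if squares_opened >= self.threshold:
            self.available = True

    def use(self, mines: Set[Cell], revealed: Set[Cell], rows: int, cols: int,
            rng: random.Random) -> Optional[Cell]:
        if not self.available:
            return None
        safe = [(r, c) for r in range(rows) for c in range(cols)
                if (r, c) not in mines and (r, c) not in revealed]
        if not safe:
            return None
        self.available = False
        cell = rng.choice(safe)
        revealed.add(cell)
        return cell


# Usage: a wall of mines down column 2 of a 5x5 board.
mines = {(r, 2) for r in range(5)}
revealed: Set[Cell] = set()
bonus = LuckySweep(threshold=5)
bonus.record_click(reveal((0, 0), mines, revealed, 5, 5))  # opens columns 0-1
print(bonus.use(mines, revealed, 5, 5, random.Random(0)))   # a square in column 3 or 4
```

Passing in a `random.Random` instance keeps the choice of free tile reproducible in tests; gating the bonus on a single click's clear count is what makes it feel luck‑driven, as the testers observed.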

Performance Comparison

In development speed, OpenAI’s Codex took roughly twice as long as Claude Code to produce a functional Minesweeper clone. Both tools run in terminal‑style interfaces with commands for permission management and progress animations. Codex’s longer development time may reflect differences in underlying model capabilities or workflow efficiency.

Conclusion

The experiment demonstrates that AI coding agents can faithfully reproduce classic games, handling core mechanics and even adding thoughtful UI touches. Yet variations in visual polish, optional features, and development speed indicate that human oversight remains valuable. As AI tools continue to evolve, they promise to become increasingly reliable partners in software creation, though they are not yet a complete substitute for experienced developers.

Source: arstechnica.com