Key Points

- Microsoft has begun deploying its own Maia 200 AI inference chip in data centers.

- Microsoft describes the Maia 200 as an "AI inference powerhouse" and claims it outperforms Amazon's Trainium and Google's TPU chips.

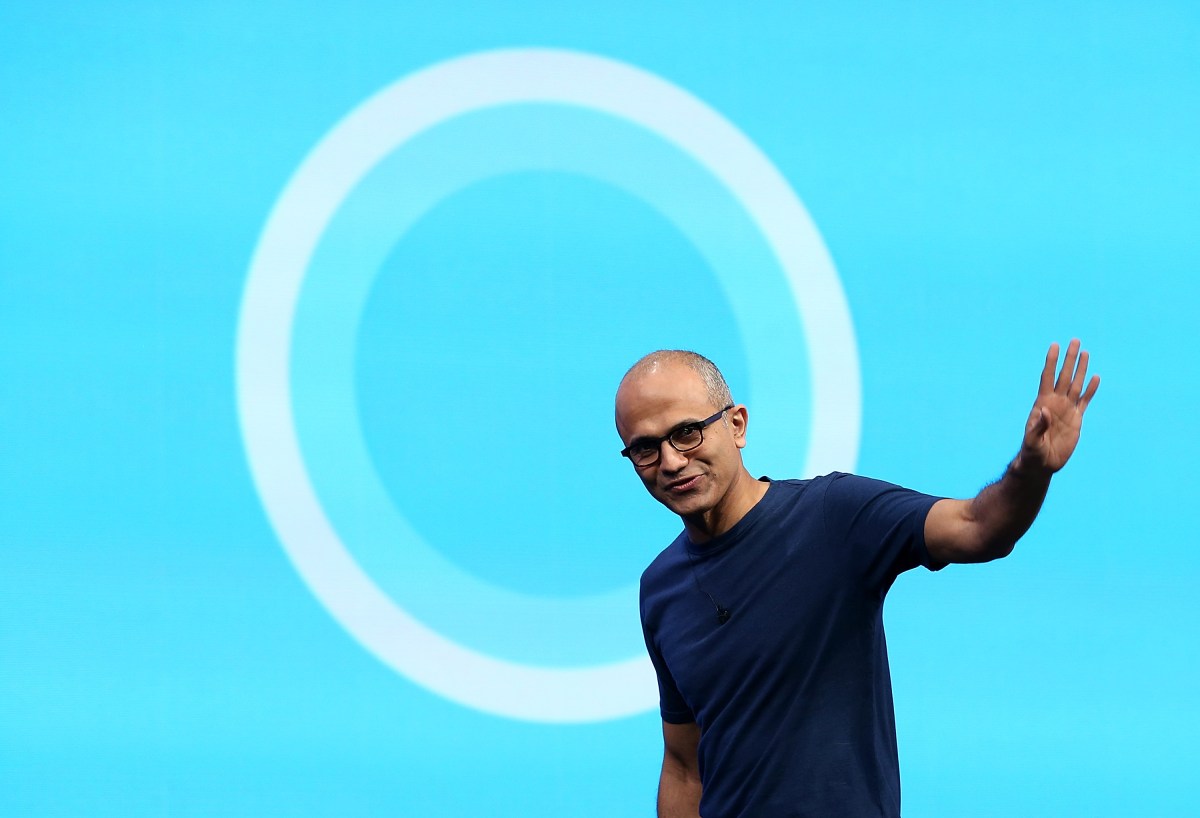

- CEO Satya Nadella said Microsoft will continue buying GPUs from Nvidia and AMD despite the new chip.

- The Superintelligence team, led by Mustafa Suleyman, will be the first internal users of the Maia 200.

- Maia 200 will also support OpenAI models on Microsoft Azure.

- The strategy combines in‑house chip development with existing supplier partnerships to address supply challenges.

Microsoft Deploys Its First In‑House AI Chip

Microsoft recently installed the initial batch of its own AI inference processor, the Maia 200, in a data center and plans to expand the rollout in the coming months. The chip is marketed as an “AI inference powerhouse,” optimized for the demanding compute work of running AI models in production environments.

Performance Claims and Competitive Landscape

According to Microsoft, the Maia 200 outperforms competing offerings such as Amazon’s Trainium chips and Google’s Tensor Processing Units. Like other cloud giants, the company is pursuing custom silicon because the latest Nvidia hardware remains difficult and expensive to secure, a supply crunch that has yet to ease.

Continued Partnerships With Nvidia and AMD

Despite the launch of its own chip, Microsoft CEO Satya Nadella affirmed that the firm will keep buying GPUs from Nvidia and AMD. He highlighted the value of those partnerships, noting that both companies are innovating alongside Microsoft. Nadella explained that vertical integration does not mean exclusive reliance on internal components; the company will continue to source external chips as part of its broader strategy.

First Internal Use by the Superintelligence Team

The Maia 200 will initially be used by Microsoft’s Superintelligence team, which is tasked with developing the company’s frontier AI models. Mustafa Suleyman, a co‑founder of Google DeepMind who now leads the team, announced that his group will get first access to the new processor. He posted on X that the launch marked a “big day” for the team.

Support for OpenAI Models on Azure

Microsoft also indicated that the Maia 200 will support OpenAI models running on the Azure cloud platform, ensuring that customers can benefit from the new hardware even when using external AI services.

Strategic Implications

Microsoft is pursuing a dual approach: building proprietary silicon while keeping its supplier relationships with Nvidia and AMD. By drawing on both internal and external hardware, the company aims to reduce its exposure to supply shortages and speed up AI work across its internal projects and cloud services.

Source: techcrunch.com