Key Points

- AI‑generated videos of Hurricane Melissa are spreading rapidly on TikTok, Instagram and WhatsApp.

- Many clips carry the watermark of OpenAI’s Sora video generator, showing they were made with AI tools.

- The fabricated footage shows extreme flooding and rescue scenes that have not occurred.

- Authorities urge reliance on official sources such as the Jamaica Information Service for accurate updates.

- Tips to verify content include checking the source, looking for timestamps, and cross‑checking with reputable news outlets.

- Users are advised to pause before sharing sensational videos to avoid spreading panic.

- The incident underscores the growing challenge of deepfake media in disaster situations.

AI-Generated Storm Footage Floods Social Media

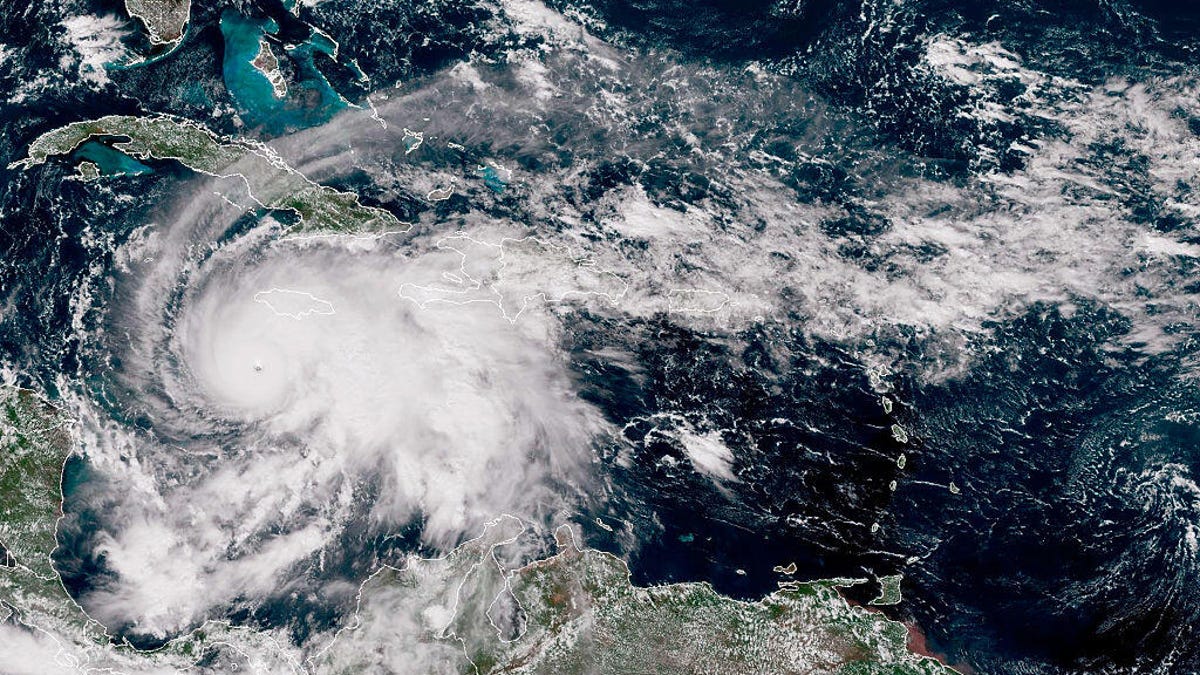

In the lead‑up to Hurricane Melissa’s landfall on Jamaica, social networks have been flooded with dramatic videos of extreme flooding, collapsing structures and rescue operations that never occurred. On TikTok, Instagram and WhatsApp, these clips are drawing millions of views within hours. Many of the videos are spliced together from recordings of earlier storms, while others are produced entirely by text‑to‑video AI tools. Several bear a watermark identifying them as creations of OpenAI’s Sora video generator.

Impact on Public Perception and Safety

The spread of fabricated storm footage is sowing confusion and fear. Viewers who encounter vivid but false depictions of the hurricane’s effects may panic, pass the misinformation along, or overlook verified alerts. During a crisis, accurate information is vital both for personal safety and for emergency responders coordinating relief efforts.

Official Guidance and Verification Strategies

Jamaican officials stress the importance of consulting trusted channels for real‑time updates. The Jamaica Information Service, the Office of Disaster Preparedness and Emergency Management, and the Prime Minister’s office provide reliable information on the storm’s trajectory and safety instructions. To judge whether a video is authentic, users are advised to check its source, look for timestamps or recognizable media branding, and watch for the Sora watermark, which signals AI generation. Cross‑checking footage against official meteorological reports and reputable news outlets such as the BBC, Reuters or the Associated Press can help confirm authenticity.

Recommendations for Responsible Sharing

Experts recommend pausing before reposting sensational videos, especially when their origin is unclear. Relying on local radio stations, emergency alerts and official government feeds is the safest way to stay informed. By exercising caution and verifying sources, the public can help limit the spread of harmful misinformation during the hurricane.

Broader Implications of AI‑Driven Misinformation

The Hurricane Melissa episode illustrates how advances in AI video generation are changing disaster reporting. The ability to produce realistic‑looking storm scenes in seconds poses a new challenge for media literacy and emergency communication. As AI tools become more accessible, telling real footage from fabricated content will demand greater vigilance from both platforms and users.

Source: cnet.com