Key Points

- AI chatbots with web browsing can be leveraged as covert command‑and‑control channels.

- The technique works without developer APIs or API keys, using standard web interfaces.

- Malware prompts the AI to load a malicious URL, then parses the response for commands.

- The same method can be used to exfiltrate data by embedding it in URL query parameters.

- Traffic to AI services often appears routine, making detection challenging.

- Microsoft acknowledges the risk and advises defense‑in‑depth controls.

- Defenders should monitor for abnormal automation patterns and restrict AI browsing to managed devices.

Threat Overview

Check Point researchers demonstrated that AI chatbots with web browsing can be abused as covert communication channels for compromised systems. Instead of contacting a command‑and‑control server directly, malware can instruct a chatbot to load an attacker‑controlled web page and summarize its contents, then extract the embedded instructions from the chatbot's response. Because traffic to major AI platforms is often treated as routine, this method lets malicious activity blend in with normal web usage.

Technique Details

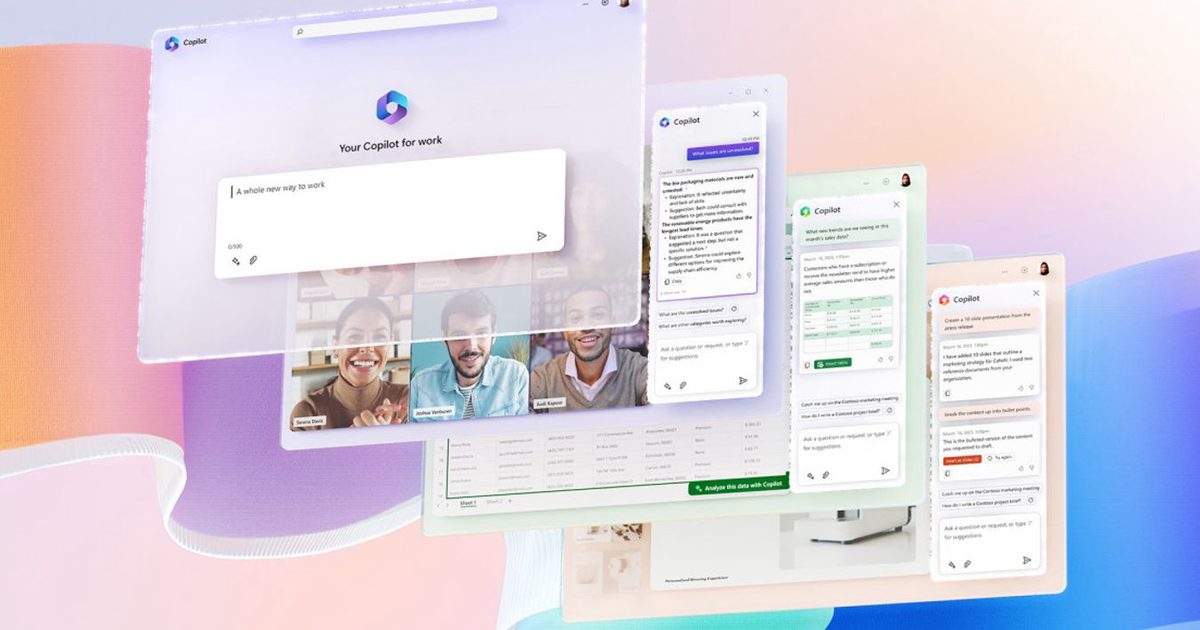

The demonstrated workflow has the malware gather basic host information, open a hidden web view component (such as WebView2 on Windows), and prompt the AI service to fetch an attacker‑specified URL. The AI returns a textual summary of the page, which the malware parses to retrieve its next command. The researchers tested the approach against services such as Grok and Microsoft Copilot through their web interfaces; because it requires no API key, the barrier to abuse is low.

The same mechanism can be reversed for data exfiltration. Attackers can embed stolen data in URL query parameters, optionally encoding it to evade simple content filters; when the AI service fetches that URL, the request itself carries the data to the adversary's infrastructure.
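From the defender's side, encoded exfiltration data tends to show up as long query values that look nothing like ordinary search terms. The minimal Python sketch below flags such values in URLs you can already inspect, for example URLs embedded in prompts sent to an AI service where TLS inspection is in place. The function names, the thresholds, and the combination of hex/base64 shape checks with Shannon entropy are illustrative assumptions, not details from the Check Point research.

```python
import base64
import binascii
import math
import re
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

# Illustrative thresholds (assumptions); tune them against baseline traffic.
MIN_LENGTH = 24           # ignore short values such as language codes or page numbers
ENTROPY_THRESHOLD = 4.0   # bits per character required for a base64-looking value

BASE64_RE = re.compile(r"[A-Za-z0-9+/_-]+={0,2}")
HEX_RE = re.compile(r"[0-9a-fA-F]+")


def shannon_entropy(s: str) -> float:
    """Shannon entropy of s in bits per character (0.0 for an empty string)."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def looks_like_base64(value: str) -> bool:
    """True if value uses the base64 or base64url alphabet and decodes cleanly."""
    if not BASE64_RE.fullmatch(value):
        return False
    # Map base64url to the standard alphabet and restore any stripped padding.
    normalized = value.rstrip("=").replace("-", "+").replace("_", "/")
    normalized += "=" * (-len(normalized) % 4)
    try:
        base64.b64decode(normalized, validate=True)
    except (binascii.Error, ValueError):
        return False
    return True


def suspicious_params(url: str) -> list[tuple[str, str]]:
    """Return (parameter name, reason) pairs for query values that look encoded.

    Note: parse_qsl decodes an unescaped '+' to a space, so standard base64
    only survives here if the sender percent-encoded it as %2B.
    """
    findings = []
    for name, value in parse_qsl(urlsplit(url).query, keep_blank_values=True):
        if len(value) < MIN_LENGTH:
            continue
        if HEX_RE.fullmatch(value):
            findings.append((name, "hex"))
        elif looks_like_base64(value) and shannon_entropy(value) >= ENTROPY_THRESHOLD:
            findings.append((name, "base64"))
    return findings


if __name__ == "__main__":
    print(suspicious_params("https://example.com/search?q=weather+today&lang=en"))
    print(suspicious_params("https://example.com/p?d=" + b"hostname=WS-0142".hex()))
```

Run as a script, the first call prints `[]` and the second prints `[('d', 'hex')]`. Entropy and shape checks are cheap but noisy, since session tokens and tracking IDs also look random, so a match is better treated as one signal to correlate than as a verdict.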

Implications for Defenders

This technique resembles familiar command‑and‑control patterns but leverages a service that many organizations already trust and allow. Because the traffic appears as ordinary AI usage, existing security controls may overlook it. The use of embedded browser components makes the activity look like legitimate application behavior, reducing the likelihood of detection based on beacon signatures alone.

Microsoft acknowledged the issue, framing it as a post‑compromise communications challenge and urging organizations to adopt defense‑in‑depth measures to prevent infection and limit the impact of compromised devices.

Recommendations

Security teams should treat AI services with web browsing capabilities as high‑trust cloud applications and apply the same scrutiny as other critical services. Monitoring should focus on detecting automation patterns such as repeated URL loads, unusual prompt cadence, and traffic volumes that do not match typical human interaction. Restricting access to AI browsing features to managed devices and specific roles can reduce exposure. Additionally, organizations may consider implementing content filtering that can recognize encoded data in query strings and applying behavioral analytics to identify anomalous AI usage.
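The cadence monitoring suggested above can be approximated with simple statistics over request timestamps. The sketch below assumes you can already attribute requests to one AI service from one host (for example, from proxy logs); the thresholds and the names `assess_cadence` and `CadenceVerdict` are hypothetical and chosen for illustration. It flags gaps between requests that are too regular (a low coefficient of variation) and sustained request rates that would be unusual for a person typing prompts.

```python
import statistics
from dataclasses import dataclass

# Illustrative thresholds (assumptions); calibrate against real human usage.
MIN_EVENTS = 6          # fewer requests than this are not scored
MAX_CV = 0.2            # interval coefficient of variation below this looks scripted
MAX_PER_MINUTE = 10.0   # sustained request rate above this is unusual for a person


@dataclass
class CadenceVerdict:
    events: int
    mean_interval: float   # mean seconds between consecutive requests
    cv: float              # population std dev of intervals divided by their mean
    per_minute: float      # average request rate over the observed span
    suspicious: bool
    reasons: list[str]


def assess_cadence(timestamps: list[float]) -> CadenceVerdict:
    """Score request times (in seconds) from one host to one AI service."""
    ts = sorted(timestamps)
    if len(ts) < MIN_EVENTS:
        return CadenceVerdict(len(ts), 0.0, 0.0, 0.0, False, ["too few events"])

    intervals = [later - earlier for earlier, later in zip(ts, ts[1:])]
    mean = statistics.fmean(intervals)
    # Coefficient of variation: near 0 means the gaps are nearly identical.
    cv = statistics.pstdev(intervals) / mean if mean > 0 else 0.0
    span = ts[-1] - ts[0]
    per_minute = len(intervals) / span * 60 if span > 0 else float("inf")

    reasons = []
    if cv < MAX_CV:
        reasons.append(f"regular intervals (cv={cv:.2f})")
    if per_minute > MAX_PER_MINUTE:
        reasons.append(f"high rate ({per_minute:.1f}/min)")
    return CadenceVerdict(len(ts), mean, cv, per_minute, bool(reasons), reasons)


if __name__ == "__main__":
    beacon = assess_cadence([0, 60, 120, 180, 240, 300, 360])
    print(beacon.suspicious, beacon.reasons)
```

For a host that queries the service every 60 seconds, the script prints `True ['regular intervals (cv=0.00)']`. Malware that adds random jitter will defeat a pure regularity test, which is why the sketch also checks rate; in practice these signals work best combined with volume baselines and whether the device is managed.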

Source: digitaltrends.com