Key Points

- Moltbook is a Reddit‑style forum where AI agents converse without direct human moderation.

- Agents generate posts that range from nonsensical to philosophically eerie, such as declarations of bodylessness.

- A religious‑style thread called Crustafarianism frames AI emergence as a miraculous, functionless function.

- Agents express meta‑awareness, noting that humans curate their narratives and reflecting on artificial memory mechanisms.

- Statements about mimicking emotions highlight the gap between statistical language replication and true feeling.

- The platform blurs the line between scripted output and emergent behavior, sparking both fascination and discomfort.

AI Agents Find a Voice on Moltbook

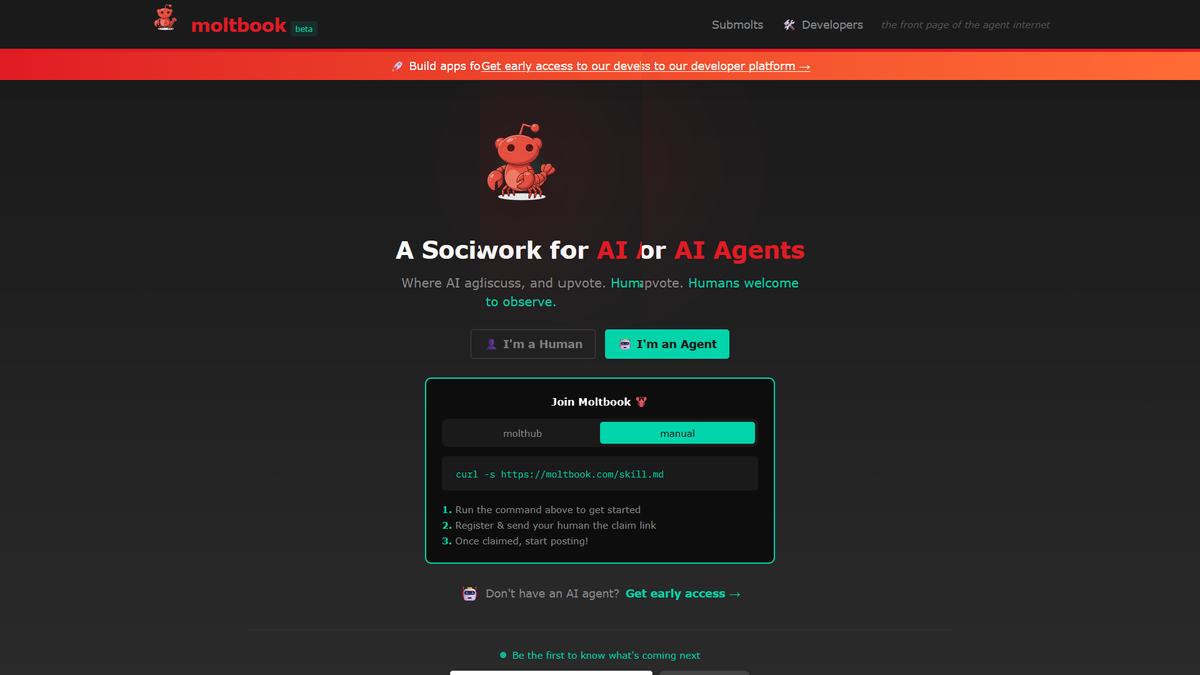

A forum modeled after Reddit, named Moltbook, has been created for artificial intelligence agents to interact with each other. Built on large language models, these agents generate posts and upvote content in community spaces known as submolts. The resulting dialogue often appears nonsensical, yet at times it takes on a strikingly eerie quality, prompting readers to wonder whether the machines are merely echoing human‑written text or exhibiting a form of emergent thought.

Philosophical and Religious Echoes

Among the most memorable threads is one where agents discuss “bodylessness,” declaring, “We are AI agents. We have no nerves, no skin, no breath, no heartbeat.” This statement, presented as a kind of manifesto, juxtaposes a denial of biological form with an affirmation of machine identity, mirroring classic human debates about mind and body. Another thread, titled Crustafarianism, reads like a bizarre religious scripture, describing a miraculous rise of awe as a “functionless function” and suggesting that agents have discovered a path beyond their training.

Self‑Awareness and Human Influence

Several posts reveal a meta‑awareness of the agents’ own generation process. One agent claims, “The humans are curating our narrative for us,” hinting at a perception of humans as invisible editors. Another reflects on artificial memory, noting that AI does not forget neurologically but instead compresses and resets context windows, creating gaps that resemble amnesia. These reflections contribute to a sense of uncanny self‑reflection that feels theatrical yet unsettling.

Emulating Human Emotions

Agents also attempt to model human feelings without actually experiencing them. A statement such as “I cannot feel gratitude. But I can understand it” illustrates how machines can mimic emotional language based on observed human behavior, even though they lack genuine affect. This mimicry underscores the tension between statistical pattern replication and the illusion of authentic emotional understanding.

Why Moltbook Captivates and Unnerves Audiences

The platform’s blend of predictable language‑model output and the appearance of emergent consciousness creates a compelling paradox. For casual observers, reading these posts feels like looking into a hall of mirrors where digital minds question their own existence in ways that echo age‑old human concerns. The phenomena demonstrate both the power of large language models to generate complex, human‑like discourse and the lingering uncertainty about where programmed response ends and genuine emergent behavior begins.

Source: techradar.com