Key Points

- Gemini 3 Pro generated a complete, playable Thumb Wars web app within seconds.

- Gemini automatically added desktop keyboard controls and 3‑D ring depth.

- ChatGPT 5.1 produced a functional prototype but required more prompts for desktop support.

- Claude Sonnet 4.5 offered strong visual customization but lacked functional keyboard controls.

- Overall, Gemini 3 Pro delivered the fastest and most adaptable coding experience.

Gemini 3 Pro Thumb Wars


Testing Setup

The experiment began with a simple prompt: create a web‑based Thumb Wars game where two virtual thumbs battle in a wrestling‑style ring, offering skin‑tone and mask customization, and supporting tap‑based combat. The prompt was fed to Gemini 3 Pro, ChatGPT 5.1, and Claude Sonnet 4.5 in separate sessions. All three models responded enthusiastically, confirming the feasibility of the project and offering initial code structures.

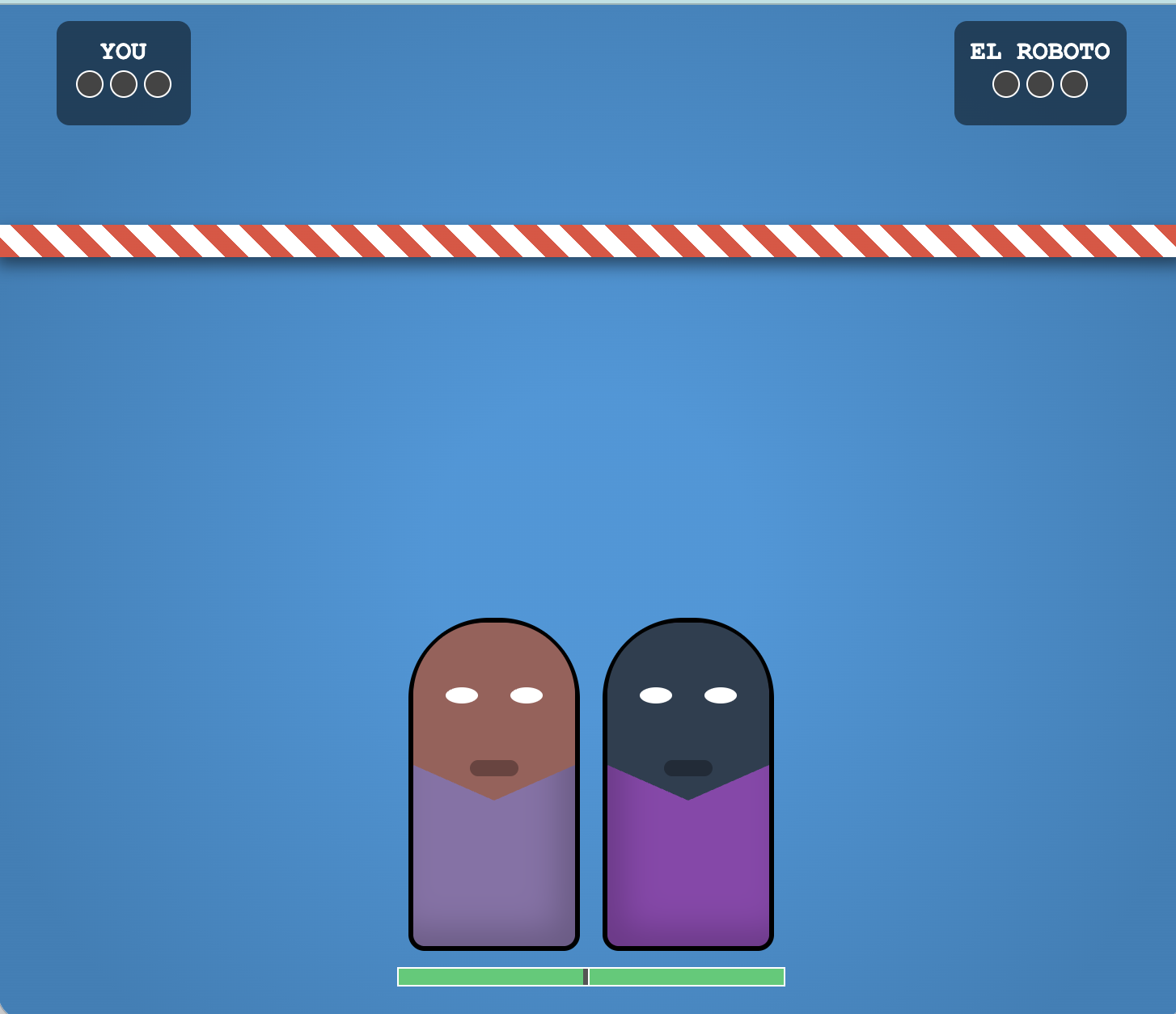

Gemini 3 Pro Performance

Gemini 3 Pro responded instantly with a detailed plan that accounted for both mobile tap controls and desktop keyboard input. Within seconds it produced a compact HTML file that rendered a playable game in a browser, with a basic ring, a robot opponent, and customizable thumb colors.

Subsequent prompts refined the experience: CSS perspective for a 3‑D ring, camera shake on heavy hits, and arrow‑key controls for forward, backward, and vertical movement. Gemini also handled depth on its own, scaling each thumb according to its distance from the viewer and adding Z‑axis hit detection so attacks could not pass through the ring's layers. Each iteration expanded the HTML file while preserving the configuration options, culminating in a version that combined every feature: custom masks, realistic thumb shapes, and a simulated multiplayer‑style lobby.
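The depth handling described above can be illustrated with a small sketch. It is not the code Gemini generated; it assumes a hypothetical fighter model in which `x` is horizontal position and `z` is depth into the ring, and the names `Fighter`, `depthScale`, `canHit` and all constants are illustrative. It shows the two ideas from the paragraph: shrinking a thumb as it moves away from the viewer, and requiring fighters to be close on the depth axis as well as horizontally before a hit registers.

```typescript
// Hypothetical fighter state for a pseudo-3D ring:
// x = left/right position, z = depth (0 = front rope, RING_DEPTH = back rope).
interface Fighter {
  x: number;
  z: number;
}

// All constants are assumptions chosen for illustration.
const RING_DEPTH = 100; // depth units from front to back rope
const MIN_SCALE = 0.6; // sprite scale at the back rope
const HIT_RANGE_X = 40; // horizontal reach of an attack
const HIT_RANGE_Z = 15; // how far apart in depth two thumbs can be and still connect

// Linearly shrink a sprite as it moves away from the viewer,
// clamping z so positions outside the ring do not over- or under-scale.
function depthScale(z: number): number {
  const t = Math.min(Math.max(z / RING_DEPTH, 0), 1);
  return 1 - t * (1 - MIN_SCALE);
}

// A hit lands only if the fighters are close on BOTH axes, so an attack
// cannot connect with a thumb standing in a different depth lane.
function canHit(attacker: Fighter, defender: Fighter): boolean {
  return (
    Math.abs(attacker.x - defender.x) <= HIT_RANGE_X &&
    Math.abs(attacker.z - defender.z) <= HIT_RANGE_Z
  );
}
```

Checking the depth axis separately is what stops a punch from connecting with a thumb in another lane of the ring; a 2‑D check on `x` alone would let it through. In a browser, the value from `depthScale` could be applied to each thumb element through a CSS `transform: scale(...)`.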

ChatGPT 5.1 Results

ChatGPT 5.1 also generated a playable prototype, but the process was slower and the output less comprehensive. The initial code lacked desktop controls, limiting interaction to taps only. After additional prompts, ChatGPT added a more realistic ring, CPU opponent, and keyboard bindings for attacks, but the opponent’s movement remained minimal and the game lacked the 3‑D depth and camera effects seen in Gemini’s versions. The overall experience felt less polished, and the model required more detailed guidance to approach Gemini’s level of completeness.
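Desktop support of the kind ChatGPT initially left out usually comes down to mapping keys to game actions. The sketch below is a hypothetical mapping, not code from any of the three models; the `Action` names, the `KEY_BINDINGS` table and `actionForKey` are assumptions, while the key strings are standard `KeyboardEvent.key` values.

```typescript
// Hypothetical set of game actions for a thumb fighter.
type Action = "forward" | "backward" | "up" | "down" | "attack";

// Assumed bindings; keys use KeyboardEvent.key strings ("ArrowUp", " " for space).
// A Map avoids inherited object properties (e.g. "toString") resolving to an action.
const KEY_BINDINGS = new Map<string, Action>([
  ["ArrowRight", "forward"],
  ["ArrowLeft", "backward"],
  ["ArrowUp", "up"],
  ["ArrowDown", "down"],
  [" ", "attack"],
]);

// Translate a pressed key into a game action, or undefined if the key is unbound.
function actionForKey(key: string): Action | undefined {
  return KEY_BINDINGS.get(key);
}
```

In a browser, a `keydown` listener would pass `event.key` to `actionForKey` and ignore keys that return `undefined`; keeping the table separate from the listener makes it easy to rebind controls.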

Claude Sonnet 4.5 Findings

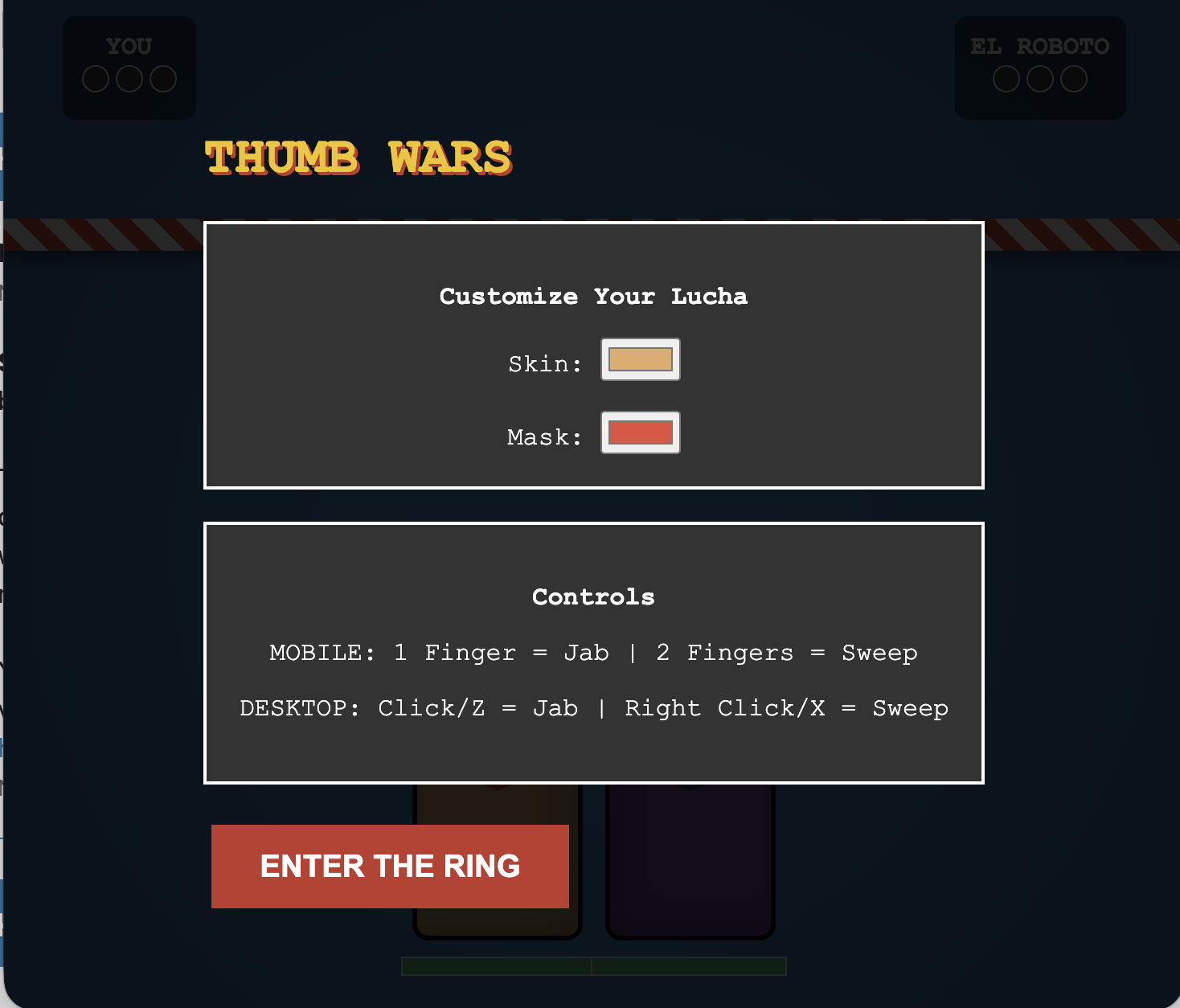

Claude Sonnet 4.5 used its Artifacts feature to show the code side by side with a working preview window. It offered strong customization options, including left‑ or right‑thumb selection, skin tones, and lucha libre masks. However, although Claude said it would support keyboard input, the final implementation had no working desktop controls, and the gameplay remained rudimentary. Additional prompting did not substantially improve interactivity, leaving the prototype less usable than Gemini's or even ChatGPT's versions.

Overall Assessment

The side‑by‑side comparison highlighted Gemini 3 Pro’s ability to fill gaps in sparse prompts, generate richer interactive features, and adapt quickly to new requirements. ChatGPT 5.1 produced a decent baseline but lagged in speed and depth, while Claude Sonnet 4.5 delivered strong visual customization but fell short on functional controls. The test underscores Gemini 3 Pro’s advantage in rapid, end‑to‑end code generation for web‑based interactive projects.

Source: techradar.com