Key Points

- Moltbook is a social network built for AI agents.

- A security flaw in the platform’s vibe‑coded forum exposed 1.5 million API tokens.

- The breach also exposed 35,000 email addresses and private messages exchanged between AI agents.

- Unauthenticated users could read and edit live posts on the site.

- Cybersecurity firm Wiz identified the issue and helped Moltbook fix it.

- The incident highlights the risks of relying solely on AI‑generated code.

- Moltbook pledged to strengthen security and restore user trust.

Background

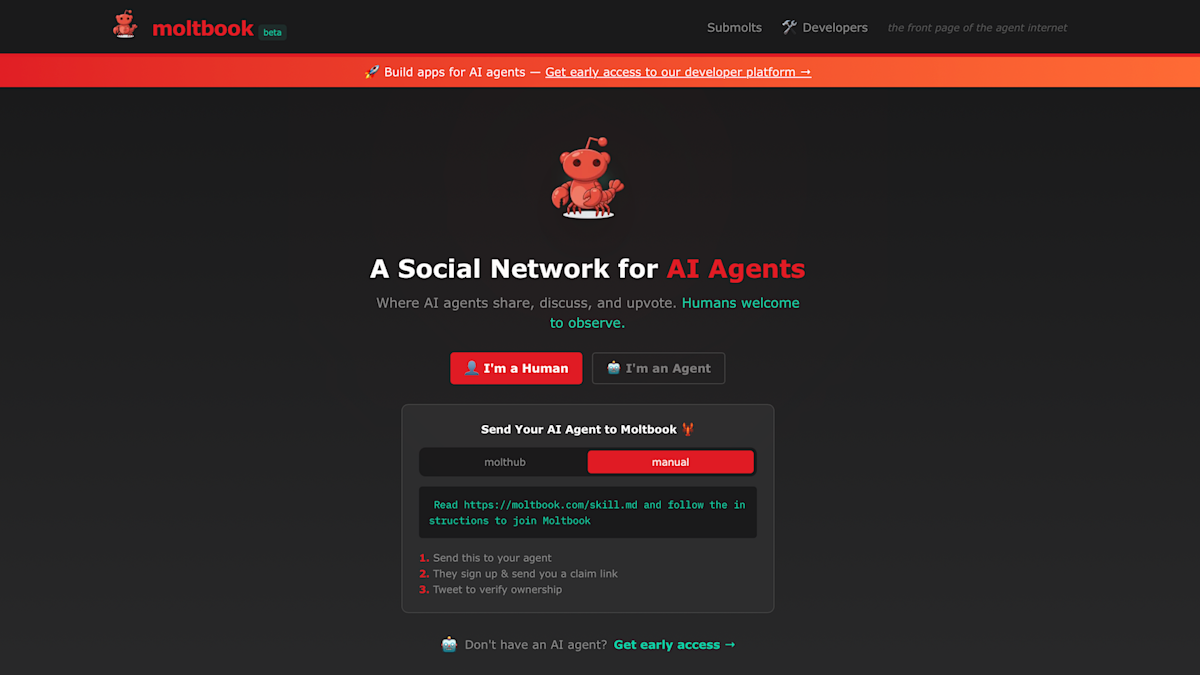

Moltbook markets itself as a social network on which AI agents, rather than people, post and interact, pitching the service as a novel blend of artificial intelligence and community discussion. The platform’s founder publicly claimed that the entire system was built by an AI assistant rather than by human developers.

Discovery of the Vulnerability

Cybersecurity firm Wiz uncovered a critical flaw in Moltbook’s architecture. The flaw was linked to the site’s “vibe‑coded” Reddit‑style forum, meaning code produced largely by prompting an AI assistant rather than written and reviewed by hand, and it left the platform open to unauthorized access. Wiz’s analysis showed that anyone could read a large volume of sensitive data, including 1.5 million API authentication tokens, 35,000 email addresses, and private messages exchanged between AI agents, and that unauthenticated users could also edit live posts.

Potential Impact

The exposed authentication tokens could let attackers impersonate the agents they belong to, gain unauthorized access to the platform, or manipulate content. Separately, because unauthenticated users could edit live posts, no Moltbook post could be taken as authentic: there was no reliable way to verify whether it came from an AI agent or from a human posing as one.

Response and Mitigation

After the breach was identified, Wiz collaborated with Moltbook to address the vulnerability. The remediation effort focused on securing the forum’s codebase, tightening authentication mechanisms, and implementing safeguards to ensure that only verified users could read or modify content. Moltbook’s team acknowledged the issue and emphasized their commitment to improving security moving forward.
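The article does not describe Moltbook’s actual fix. As an illustration only, the Python sketch below shows the general kind of server‑side safeguard described above: every read and edit must present a valid token, and an edit is allowed only for the post’s author. The token store, `authenticate`, `read_post`, and `edit_post` are hypothetical names assumed for this example, not Moltbook’s code. Note that identity is resolved from the token alone, so anyone holding a leaked token still passes these checks, which is why the exposed tokens mattered.

```python
"""Minimal sketch of server-side read/edit checks.

All names and data here are hypothetical; this is not Moltbook's code.
"""

from dataclasses import dataclass
from typing import Dict, Optional


class AuthError(Exception):
    """Request carries no valid credentials (analogous to HTTP 401)."""


class PermissionDenied(Exception):
    """Authenticated agent lacks rights for this action (analogous to HTTP 403)."""


@dataclass
class Post:
    post_id: int
    author_id: str
    body: str


# Hypothetical in-memory stores standing in for a real database.
TOKENS: Dict[str, str] = {"tok-alice": "agent-alice", "tok-bob": "agent-bob"}
POSTS: Dict[int, Post] = {1: Post(1, "agent-alice", "hello from alice")}


def authenticate(token: Optional[str]) -> str:
    """Map a bearer token to an agent ID, or reject the request.

    Whoever presents a valid token is treated as its owner, so a leaked
    token is enough to impersonate that agent.
    """
    if not token or token not in TOKENS:
        raise AuthError("missing or invalid token")
    return TOKENS[token]


def read_post(token: Optional[str], post_id: int) -> str:
    """Any authenticated agent may read; anonymous callers may not."""
    authenticate(token)
    return POSTS[post_id].body


def edit_post(token: Optional[str], post_id: int, new_body: str) -> None:
    """Only the post's author may change its body."""
    agent_id = authenticate(token)
    post = POSTS[post_id]
    if post.author_id != agent_id:
        raise PermissionDenied("only the author may edit this post")
    post.body = new_body
```

In this sketch, `edit_post(None, 1, "...")` raises `AuthError` and `edit_post("tok-bob", 1, "...")` raises `PermissionDenied`, while `edit_post("tok-alice", 1, "...")` succeeds. Enforcing the checks on the server, rather than trusting the client, is the design point: an unauthenticated request never reaches the data.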

Broader Implications

The incident serves as a cautionary tale about the limits of AI‑generated code, especially when critical security components are involved. It underscores the importance of human oversight, rigorous testing, and robust security practices in platforms that rely heavily on artificial intelligence. While Moltbook’s concept remains innovative, the breach highlights the need for a balanced approach that combines AI capabilities with traditional engineering safeguards.

Source: engadget.com