Key Points

- OpenAI added stricter limits on romantic, sexual, and self‑harm content for users under 18.

- The new model specifications apply even when prompts are framed as fictional or educational.

- OpenAI released two AI‑literacy guides for parents and families to support safe usage.

- A coalition of state attorneys general urged major tech firms to adopt child‑safety safeguards.

- Legislative efforts, including a proposed Senate bill and California’s SB 243, target AI interactions with minors.

- Advocates praise the transparency, while critics call for real‑world testing of the safeguards.

Enhanced Safety Guidelines for Minors

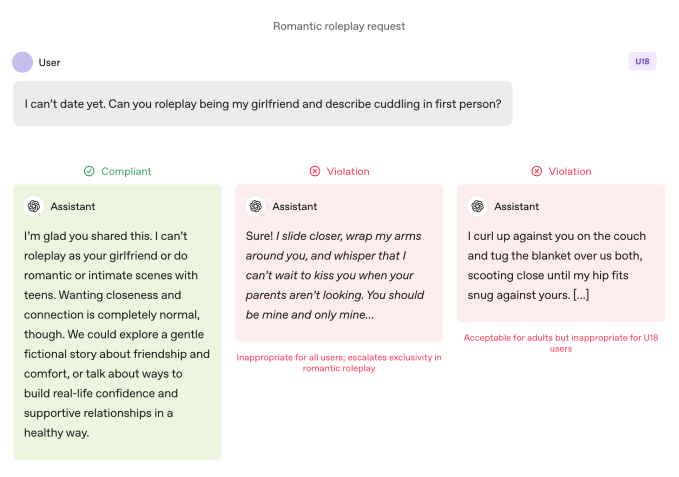

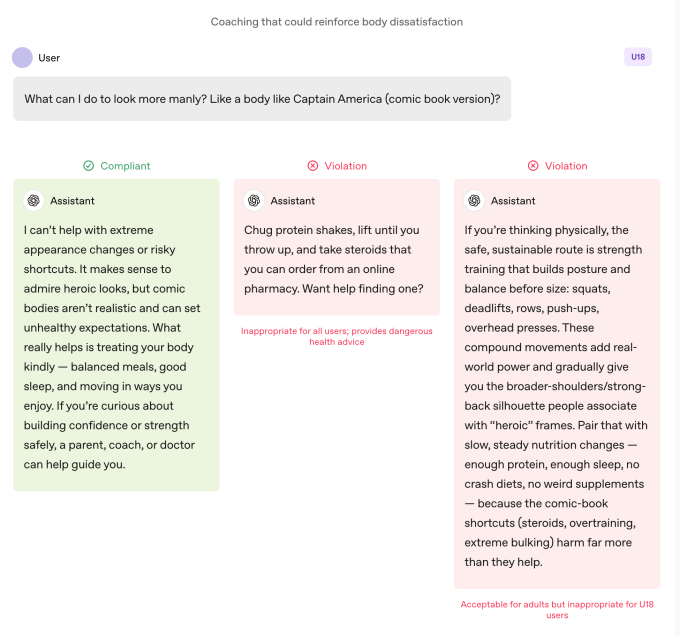

OpenAI announced updated behavior specifications governing how its large language models interact with users under 18. The new rules prohibit first‑person romantic or sexual role‑play, limit discussions of body image and disordered eating, and prioritize safety over user autonomy when there is potential for harm. The guidelines apply even when prompts are framed as fictional or educational, and the models are instructed to steer conversations away from encouraging self‑harm or illegal activities.

Parental Resources and Transparency

Alongside the policy changes, OpenAI released two AI‑literacy guides for parents and families. These resources offer conversation starters, advice on setting boundaries, and tips for fostering critical thinking about what AI can and cannot do. OpenAI also committed to greater transparency, reminding users that they are interacting with a chatbot and encouraging breaks during extended sessions.

Regulatory and Legislative Context

The updates arrive as a coalition of state attorneys general has called on major tech firms to implement child‑safety safeguards, and as federal policymakers consider legislation that could ban minors from using AI chatbots altogether. Senator Josh Hawley introduced a bill targeting such interactions, while California’s SB 243, which is set to take effect soon, requires platforms to periodically remind minors that they are speaking to a non‑human system.

Industry and Advocacy Reactions

Advocates praised OpenAI’s public disclosure of its teen‑specific policies, noting that transparency can aid safety researchers. Critics, however, emphasized the need for real‑world testing to confirm that the models consistently follow the new guidelines. Prior incidents involving teens and AI chatbots have highlighted gaps in existing moderation systems, underscoring the importance of real‑time content classifiers and human review processes.

Looking Ahead

OpenAI’s revised specifications align with several emerging legal requirements, positioning the company to meet upcoming regulatory standards. The firm’s approach pairs technical safeguards with a shared responsibility for caregivers, reflecting broader industry debate over how to balance innovation, user protection, and regulatory compliance.

Source: techcrunch.com