Key Points

- OpenAI launched ChatGPT Health, an AI virtual clinic that can read EMRs and fitness data.

- The service aims to provide personalized advice on labs, diet, exercise, and doctor visits.

- Potential benefits include clearer explanations of medical jargon and quick test‑result analysis.

- Many users express distrust, citing the risk of AI hallucinations and the service's lack of HIPAA coverage.

- Because the service falls under consumer‑tech privacy rules, uploaded data could be exposed through leaks, hacks, or legal subpoenas.

- Expert Alexander Tsiaras highlights transparency and regulatory alignment as essential.

- ChatGPT Health isolates health chats and offers data deletion, but risks remain because the data sits outside traditional healthcare systems.

- Widespread adoption may depend on stronger privacy safeguards and clear oversight.

Background

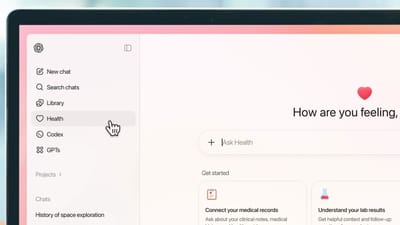

OpenAI launched ChatGPT Health as a dedicated virtual clinic that can ingest electronic medical records (EMRs) and data from fitness and health apps. The feature is designed to deliver personalized responses about lab results, diet, exercise, and preparation for doctor visits. The company markets the service as a way to help the millions of people who ask ChatGPT health and wellness questions each week.

Potential Benefits

Proponents note that the platform could make medical information more accessible. It can translate complex medical jargon into plain language, highlight points worth discussing with a clinician, and quickly parse confusing test results. In an overstretched healthcare system, such capabilities might provide immediate clarity for users who lack easy access to regular medical care.

Privacy and Trust Issues

Despite the promised convenience, many users hesitate to share sensitive health data with a commercial AI provider. The author of the source article emphasizes a lack of trust, noting that AI systems can produce hallucinated or inaccurate answers. OpenAI is not subject to HIPAA, the U.S. federal law that governs the protection of medical information. As a result, the platform operates under standard consumer‑tech privacy rules rather than the stricter safeguards that apply to traditional healthcare providers.

Data uploaded to ChatGPT Health could be vulnerable to leaks, hacks, or legal subpoenas. While the service isolates health conversations and allows users to delete their data, the underlying risk remains because the information resides outside the traditional healthcare ecosystem.

Expert Perspective

Alexander Tsiaras, founder and CEO of medical‑data platform StoryMD, stresses that trust will be the central challenge for both individuals and the broader healthcare system. He calls for transparency in how data is ingested, how hallucinations are prevented, and how the platform aligns with established regulatory frameworks. Without such measures, users may be reluctant to entrust a commercial entity with their lifelong health records.

Conclusion

ChatGPT Health offers a glimpse of how AI could augment personal health management, but its success hinges on addressing privacy, security, and reliability concerns. Until robust safeguards and clear regulatory oversight are in place, many potential users are likely to remain skeptical about entrusting the service with their most sensitive medical information.

Source: techradar.com