Key Points

- AMD detailed the Instinct MI350 series and the flagship MI355X DLC rack at Hot Chips.

- The MI355X DLC rack houses 128 GPUs with a total of 36 TB of HBM3e memory.

- Peak performance is rated at 2.6 exaflops at FP4 precision.

- Both air‑cooled and liquid‑cooled node designs were showcased, with an 8‑GPU node reaching up to 80.5 petaflops FP8.

- AMD confirmed the MI400 accelerator for 2026, featuring up to 432 GB of HBM4 per GPU and 19.6 TB/s of memory bandwidth.

- Nvidia’s upcoming Vera Rubin systems were referenced for comparison, targeting up to 3.6 exaflops FP4 inference.

- The announcement highlights AMD’s strategy to provide AI‑centric hardware at scale.

MI355X DLC

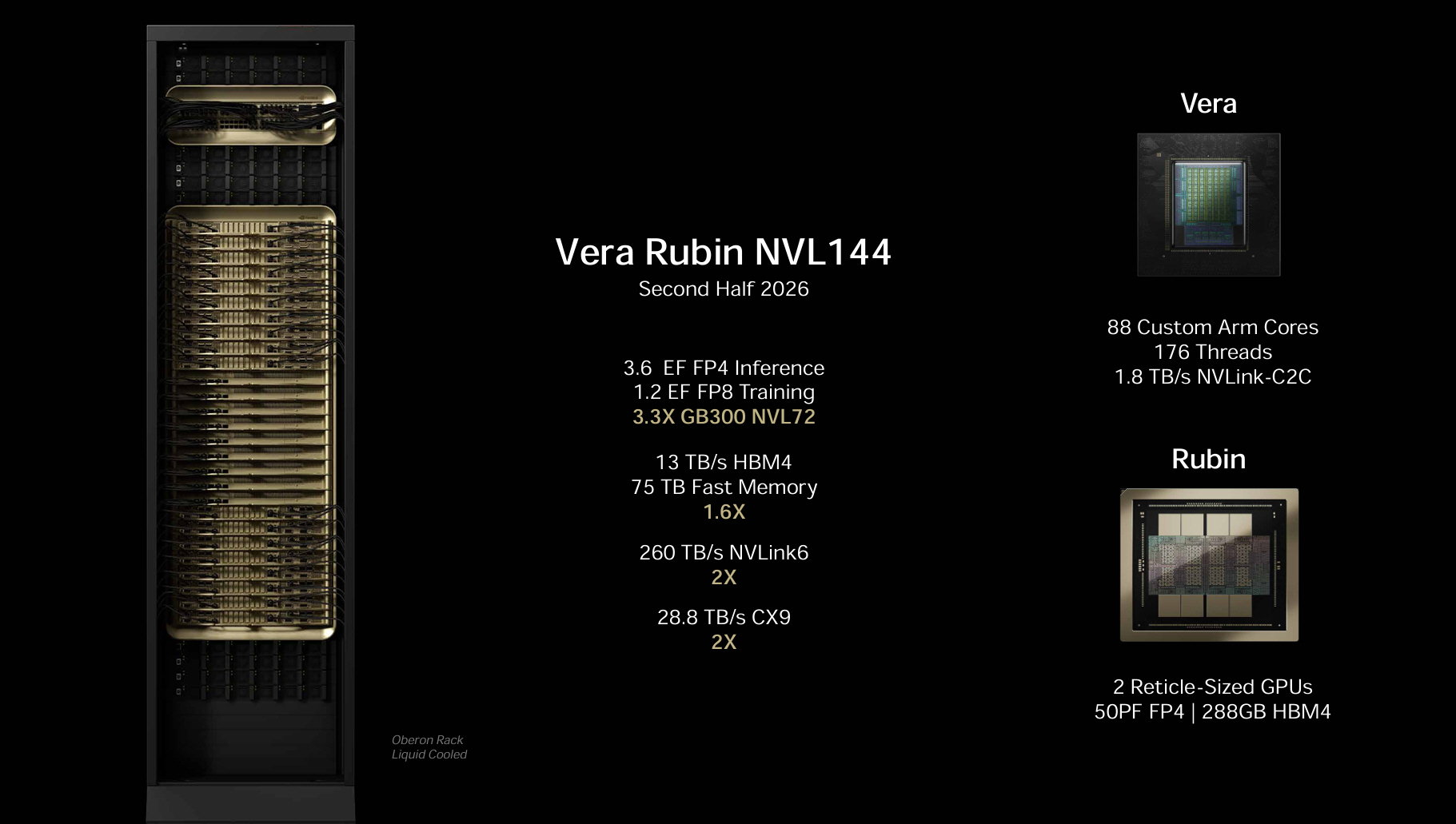

Nvidia Vera Rubin NVL144

AMD Highlights MI350 Series at Hot Chips Event

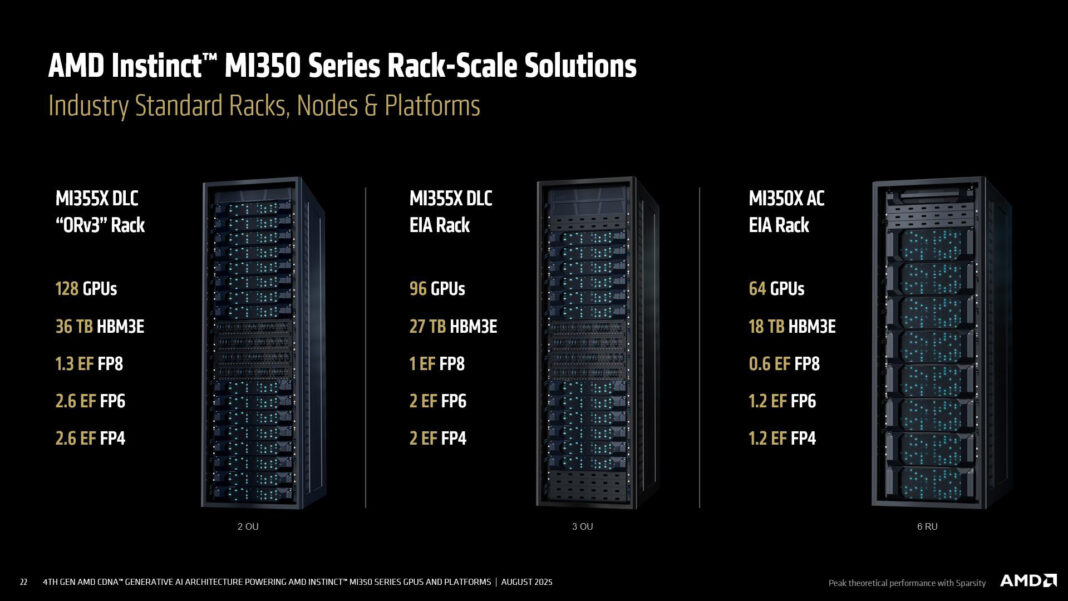

At Hot Chips, AMD focused on its CDNA 4‑based Instinct MI350 lineup, emphasizing how the platform scales from individual nodes to full racks. MI350 platforms combine 5th Gen EPYC CPUs, MI350 GPUs, and AMD Pensando Pollara NICs in OCP‑standard chassis, with GPUs linked over Infinity Fabric at up to 1,075 GB/s.

MI355X DLC Rack Specifications

The centerpiece of AMD’s announcement is the MI355X DLC (direct liquid cooling) rack, built to the OCP Open Rack V3 (ORv3) standard. Using 2OU (Open Unit) nodes, it integrates 128 MI355X GPUs with a combined 36 TB of HBM3e memory and is rated for a peak of 2.6 exaflops at FP4 precision. A smaller 96‑GPU variant for standard EIA 19‑inch racks offers 27 TB of HBM3e.
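The rack totals can be broken down into rough per‑GPU figures. The sketch below uses only the numbers quoted above and assumes decimal units (1 TB = 1,000 GB, 1 EF = 1,000 PF) and that each rack total is a simple sum over identical GPUs; the helper names are illustrative, not from any AMD tool.

```python
# Sketch: implied per-GPU figures derived only from the rack totals quoted
# in this article. Assumes decimal units (1 TB = 1,000 GB, 1 EF = 1,000 PF)
# and that each rack total is a simple sum over identical GPUs.


def per_gpu_hbm_gb(total_hbm_tb: float, gpus: int) -> float:
    """Average HBM capacity per GPU, in GB."""
    return total_hbm_tb * 1_000 / gpus


def per_gpu_pflops(total_eflops: float, gpus: int) -> float:
    """Average peak throughput per GPU, in petaflops."""
    return total_eflops * 1_000 / gpus


if __name__ == "__main__":
    # (name, GPU count, total HBM3e in TB) as quoted above
    racks = [("MI355X DLC ORv3", 128, 36.0), ("MI355X DLC EIA", 96, 27.0)]
    for name, gpus, hbm_tb in racks:
        print(f"{name}: {per_gpu_hbm_gb(hbm_tb, gpus):.2f} GB HBM3e per GPU")
    # Only the 128-GPU rack has a quoted FP4 peak (2.6 EF).
    print(f"MI355X DLC ORv3: {per_gpu_pflops(2.6, 128):.2f} PF FP4 per GPU")
```

Both variants work out to roughly 281 GB per GPU, consistent with the two racks using the same GPU configuration. Because the quoted totals are rounded, treat these per‑GPU values as approximate.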

Node‑Level Performance and Cooling Options

AMD showcased node designs that support both air‑cooled and liquid‑cooled configurations. An air‑cooled eight‑GPU MI350X node is rated at 73.8 petaflops at FP8, while the liquid‑cooled MI355X version raises that to 80.5 petaflops at FP8 in a denser footprint.
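The two node ratings can be compared per GPU. This is a minimal sketch using only the quoted 8‑GPU figures; it assumes the node rating is split evenly across its eight GPUs and reflects vendor peak numbers, not measured throughput.

```python
# Sketch: per-GPU FP8 peak and generational uplift, computed from the
# 8-GPU node figures quoted above. Vendor peak ratings only.

NODE_GPUS = 8
MI350X_NODE_FP8_PF = 73.8  # air-cooled MI350X node, as quoted
MI355X_NODE_FP8_PF = 80.5  # liquid-cooled MI355X node, as quoted


def per_gpu(node_pf: float, gpus: int = NODE_GPUS) -> float:
    """Average peak petaflops contributed by each GPU in the node."""
    return node_pf / gpus


def uplift_pct(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


if __name__ == "__main__":
    print(f"MI350X: {per_gpu(MI350X_NODE_FP8_PF):.2f} PF FP8 per GPU")
    print(f"MI355X: {per_gpu(MI355X_NODE_FP8_PF):.2f} PF FP8 per GPU")
    print(f"Uplift: {uplift_pct(MI355X_NODE_FP8_PF, MI350X_NODE_FP8_PF):.1f}%")
```

On these figures each GPU contributes roughly 9.2 PF (MI350X) versus 10.1 PF (MI355X), an uplift of about 9% from the liquid‑cooled part.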

Roadmap Outlook: MI400 and Beyond

Looking ahead, AMD confirmed that the MI400 accelerator, expected in 2026, will deliver up to 40 petaflops of FP4 performance, 20 petaflops of FP8, 432 GB of HBM4 memory per GPU, 19.6 TB/s of memory bandwidth, and 300 GB/s of scale‑out bandwidth. AMD’s roadmap shows performance gains accelerating with each generation from MI300 through MI400.
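One way to read the MI400 figures together is the roofline model, which caps attainable throughput at the lower of peak compute and memory bandwidth multiplied by a kernel's arithmetic intensity (FLOP per byte). The sketch below applies it to the quoted per‑GPU numbers; it assumes decimal units and that peak compute and peak bandwidth are reachable at the same time, which real workloads rarely manage.

```python
# Sketch: roofline-style balance point for MI400, using only the per-GPU
# figures quoted above (20 PF FP8, 40 PF FP4, 19.6 TB/s, 432 GB HBM4).
# Assumes decimal units and simultaneously achievable peaks.

PEAK_PF = {"FP8": 20.0, "FP4": 40.0}
HBM_TBPS = 19.6
HBM_GB = 432.0


def ridge_point(peak_pf: float, bw_tbps: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return (peak_pf * 1e15) / (bw_tbps * 1e12)


def attainable_pf(intensity: float, peak_pf: float, bw_tbps: float) -> float:
    """Roofline model: min(peak compute, bandwidth x arithmetic intensity)."""
    return min(peak_pf, bw_tbps * 1e12 * intensity / 1e15)


def full_sweep_ms(capacity_gb: float, bw_tbps: float) -> float:
    """Time to read every byte of HBM once at peak bandwidth, in ms."""
    return capacity_gb * 1e9 / (bw_tbps * 1e12) * 1e3


if __name__ == "__main__":
    for fmt, pf in PEAK_PF.items():
        print(f"{fmt} ridge point: {ridge_point(pf, HBM_TBPS):.0f} FLOP/byte")
    # A kernel at 100 FLOP/byte is bandwidth-bound on these numbers.
    print(f"At 100 FLOP/byte: {attainable_pf(100, PEAK_PF['FP8'], HBM_TBPS):.2f} PF")
    print(f"Full HBM sweep: {full_sweep_ms(HBM_GB, HBM_TBPS):.1f} ms")
```

The ridge points come out near 1,020 FLOP/byte for FP8 and 2,040 for FP4, and reading all 432 GB once takes about 22 ms at peak bandwidth, which gives a rough sense of how compute‑heavy a kernel must be before the extra FLOPs pay off.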

Comparative Glance at Nvidia’s Vera Rubin Plans

While AMD’s MI355X rack is a current product, Nvidia’s competing Vera Rubin architecture is slated for 2026–27. Nvidia’s Vera Rubin NVL144 system, projected for the second half of 2026, is advertised at 3.6 exaflops FP4 inference and 1.2 exaflops FP8 training, with 13 TB/s of HBM4 bandwidth and 75 TB of fast memory. Longer‑term plans include a Rubin Ultra NVL576 system targeting 15 exaflops FP4 inference and 5 exaflops FP8 training, backed by 4.6 PB/s of HBM4e bandwidth and a much larger interconnect fabric.
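The quoted rack‑level peaks can be set side by side as simple ratios. This is a paper comparison only: the systems ship in different years, and vendors may define "peak" differently (for example dense versus sparse math), so the ratios below are not performance predictions. The table and function names are illustrative.

```python
# Sketch: ratios between the vendor-quoted rack-level peaks in this article.
# Paper comparisons only; peak definitions may differ between vendors.

FP4_PEAK_EF = {
    "AMD MI355X DLC (128 GPUs)": 2.6,
    "Nvidia Vera Rubin NVL144": 3.6,
    "Nvidia Rubin Ultra NVL576": 15.0,
}
FP8_TRAINING_EF = {
    "Nvidia Vera Rubin NVL144": 1.2,
    "Nvidia Rubin Ultra NVL576": 5.0,
}


def ratio(table: dict, a: str, b: str) -> float:
    """How many times larger system `a`'s quoted peak is than system `b`'s."""
    return table[a] / table[b]


if __name__ == "__main__":
    amd, vr, ultra = FP4_PEAK_EF
    print(f"FP4: {vr} vs {amd}: {ratio(FP4_PEAK_EF, vr, amd):.2f}x")
    print(f"FP4: {ultra} vs {vr}: {ratio(FP4_PEAK_EF, ultra, vr):.2f}x")
    print(f"FP8 training: {ultra} vs {vr}: "
          f"{ratio(FP8_TRAINING_EF, ultra, vr):.2f}x")
```

On paper, Vera Rubin NVL144 is quoted at about 1.4 times the MI355X rack's FP4 peak, and Rubin Ultra NVL576 at roughly 4.2 times NVL144 for both FP4 inference and FP8 training.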

Strategic Implications

The AMD announcement underscores the company’s push to provide AI‑ready infrastructure that can compete with Nvidia’s roadmap. By delivering a production‑ready rack with 128 GPUs and exaflop‑scale performance, AMD positions itself as a viable alternative for data‑center operators running high‑throughput AI workloads.

Source: techradar.com